Configuring an HA K3s cluster

Our K3s HA setup consists of 4 homogeneous nodes (3 master nodes + 1 worker node) and can withstand a single-node failure with a short failover disruption (between 3 and 6 minutes).
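
The single-node tolerance can be illustrated with the majority (quorum) rule that etcd uses. This is a minimal sketch that assumes the 3 master nodes are the voting members of the embedded etcd datastore; the function names are illustrative only.

```python
def quorum(members: int) -> int:
    """Smallest majority of `members` voting etcd nodes."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Number of members that can fail while a majority still remains."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(
            f"{n} master node(s): quorum={quorum(n)}, "
            f"tolerated failures={fault_tolerance(n)}"
        )
```

With 3 voting members the quorum is 2, so one master node can be lost. A fourth voting member would not raise the tolerance, which is consistent with running the fourth node as a worker.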

With this HA setup we can achieve a monthly uptime of 99.9% (a maximum of about 43 minutes of downtime per month).
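
The downtime figure follows from simple arithmetic. The sketch below assumes a 30-day month and uses the upper bound of the failover window (6 minutes) stated above; the helper name is hypothetical.

```python
def downtime_budget_minutes(uptime_percent: float, days: int = 30) -> float:
    """Minutes of downtime allowed per period for a given uptime target."""
    total_minutes = days * 24 * 60  # 43,200 minutes in a 30-day month
    return total_minutes * (1 - uptime_percent / 100)


if __name__ == "__main__":
    budget = downtime_budget_minutes(99.9)
    print(f"Monthly downtime budget at 99.9%: {budget:.1f} minutes")

    # Assumption: every failover takes the worst case of 6 minutes.
    worst_case_failover = 6
    print(f"Worst-case failovers within budget: {int(budget // worst_case_failover)}")
```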

Prerequisites

Refer to K3S installation for the node specs of the product you’ll be installing.

Networking

Internal

We recommend running the K3s nodes in a 10 Gb low-latency private network for maximum security and performance.

| Protocol | Port | Description |
|---|---|---|
| TCP | 6443 | Kubernetes API Server |
| UDP | 8472 | Required for Flannel VXLAN |
| TCP | 2379-2380 | Embedded etcd |
| TCP | 10250 | metrics-server for HPA |
| TCP | 9796 | Prometheus node exporter |

- Domain Name Service (DNS) configured

- Network Time Protocol (NTP) configured

- Software Update Service - access to a network-based repository for software update packages

- Fixed private IPv4 address

- Globally unique node name (use `--node-name` when installing K3s in a VM to set a static node name)
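
Two of the requirements above (a fixed private IPv4 address and a globally unique node name) can be sanity-checked against a node inventory before installation. This sketch assumes that "private" means the RFC 1918 ranges; the inventory and node names are hypothetical.

```python
import ipaddress

# Assumption: "private" is interpreted as the RFC 1918 address ranges.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_private_ipv4(address: str) -> bool:
    """True if `address` is a valid IPv4 address inside an RFC 1918 range."""
    try:
        ip = ipaddress.IPv4Address(address)
    except ValueError:
        return False
    return any(ip in network for network in RFC1918_NETWORKS)


def duplicate_names(names) -> set:
    """Return the node names that appear more than once."""
    seen, duplicates = set(), set()
    for name in names:
        if name in seen:
            duplicates.add(name)
        seen.add(name)
    return duplicates


if __name__ == "__main__":
    # Hypothetical inventory: node name -> fixed IP address.
    inventory = {
        "k3s-master-1": "10.0.0.11",
        "k3s-master-2": "10.0.0.12",
        "k3s-master-3": "10.0.0.13",
        "k3s-worker-1": "10.0.0.21",
    }
    for name, address in inventory.items():
        status = "ok" if is_private_ipv4(address) else "not a private IPv4 address"
        print(f"{name} ({address}): {status}")
    print("Duplicate node names:", duplicate_names(inventory.keys()) or "none")
```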

External

The following port must be publicly exposed in order to allow users to access Forcepoint DSPM:

| Protocol | Port | Description |
|---|---|---|
| TCP | 443 | FDC backend |

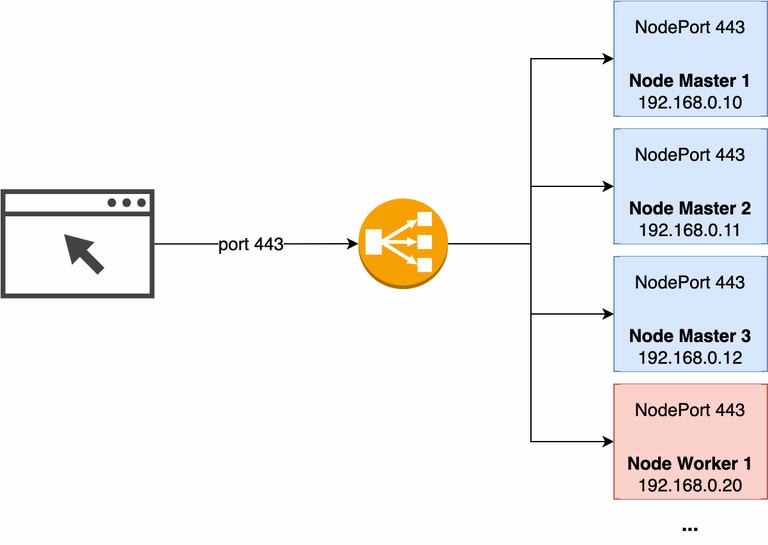

Users must not access the K3s nodes directly. Instead, a load balancer must sit between the end users and all the K3s nodes (master and worker nodes).

The load balancer must operate at Layer 4 of the OSI model and listen for connections on port 443. After the load balancer receives a connection request, it selects a target from the target group (which can be any of the master or worker nodes in the cluster) and then attempts to open a TCP connection to the selected target (node) on port 443.

The load balancer must have health checks enabled to monitor the registered targets (the nodes in the cluster) so that it sends requests only to healthy nodes.

- Timeout: 10 seconds

- Healthy threshold: 3 consecutive health check successes

- Unhealthy threshold: 3 consecutive health check failures

- Interval: 30 seconds

- Balance mode: round robin
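
To make the interaction between the thresholds and round-robin balancing concrete, here is a simplified model of how a load balancer might track target health and pick the next node. It is an illustrative sketch, not the behavior of any particular load balancer product; the class and node names are hypothetical.

```python
from dataclasses import dataclass

# Values from the settings listed above.
HEALTHY_THRESHOLD = 3
UNHEALTHY_THRESHOLD = 3


@dataclass
class Target:
    name: str
    healthy: bool = True
    successes: int = 0  # consecutive successful checks
    failures: int = 0   # consecutive failed checks

    def record(self, check_passed: bool) -> None:
        """Update health state after one health check result."""
        if check_passed:
            self.successes += 1
            self.failures = 0
            if self.successes >= HEALTHY_THRESHOLD:
                self.healthy = True
        else:
            self.failures += 1
            self.successes = 0
            if self.failures >= UNHEALTHY_THRESHOLD:
                self.healthy = False


class RoundRobin:
    """Cycle through targets in order, skipping unhealthy ones."""

    def __init__(self, targets):
        self.targets = list(targets)
        self._next = 0

    def pick(self):
        for _ in range(len(self.targets)):
            target = self.targets[self._next]
            self._next = (self._next + 1) % len(self.targets)
            if target.healthy:
                return target
        return None  # no healthy target available


if __name__ == "__main__":
    nodes = [Target("master-1"), Target("master-2"), Target("worker-1")]
    balancer = RoundRobin(nodes)
    for _ in range(UNHEALTHY_THRESHOLD):
        nodes[1].record(False)  # master-2 fails three checks in a row
    print([balancer.pick().name for _ in range(4)])
```

Under this model, with a 30-second interval and an unhealthy threshold of 3, a failed node is taken out of rotation after roughly 90 seconds, plus any time spent waiting for check timeouts. The exact figure depends on the load balancer implementation.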

Public

Refer to Estimate hardware capacity needs for the list of URLs you need to enable in your corporate proxy in order to connect to our private registries.